Visual Search by Machine Box

With the new features of Tagbox, you can build your own classifier for image recognition. Another important feature, and the one we explore here, is the image similarity endpoint /tagbox/similar, which lets you build a search engine that finds images similar to each other.

With that endpoint you will be able to:

- Recommend images that are visually similar to a given one

- Search images based on abstract concepts and patterns

- Cluster images into similar groups

Introducing Visual Search using Tagbox

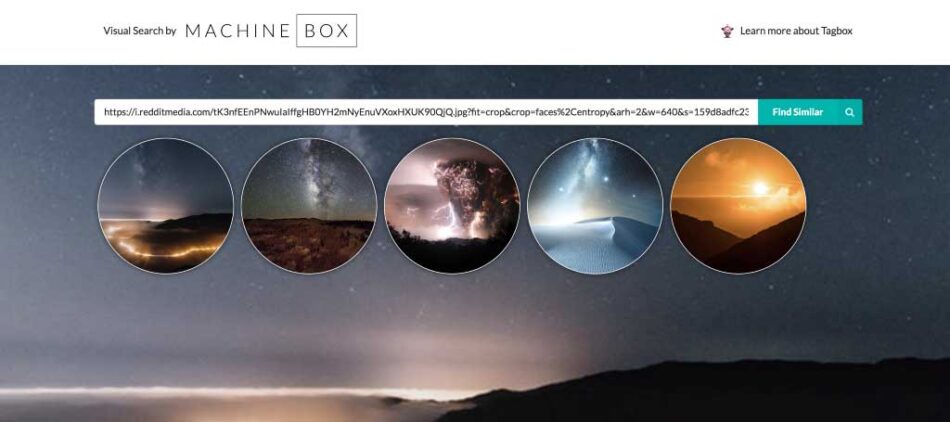

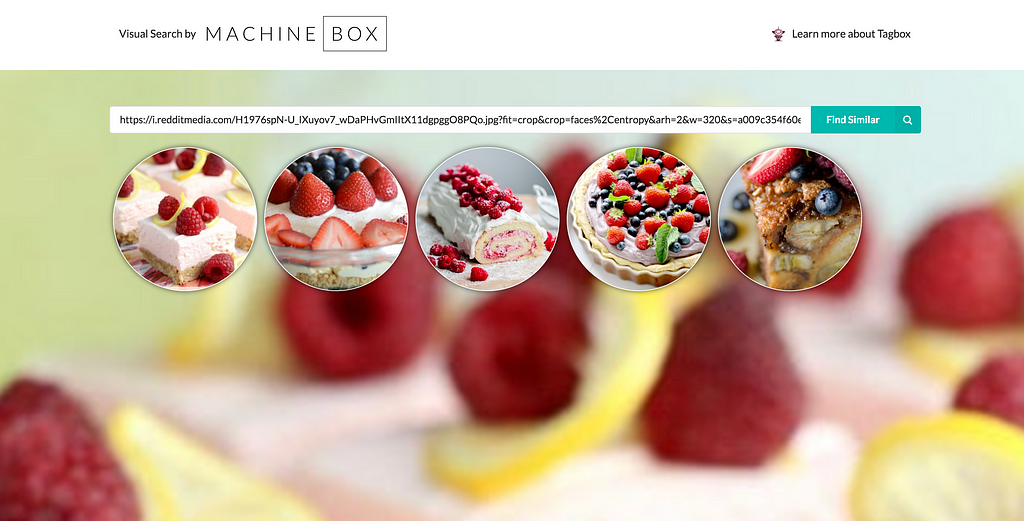

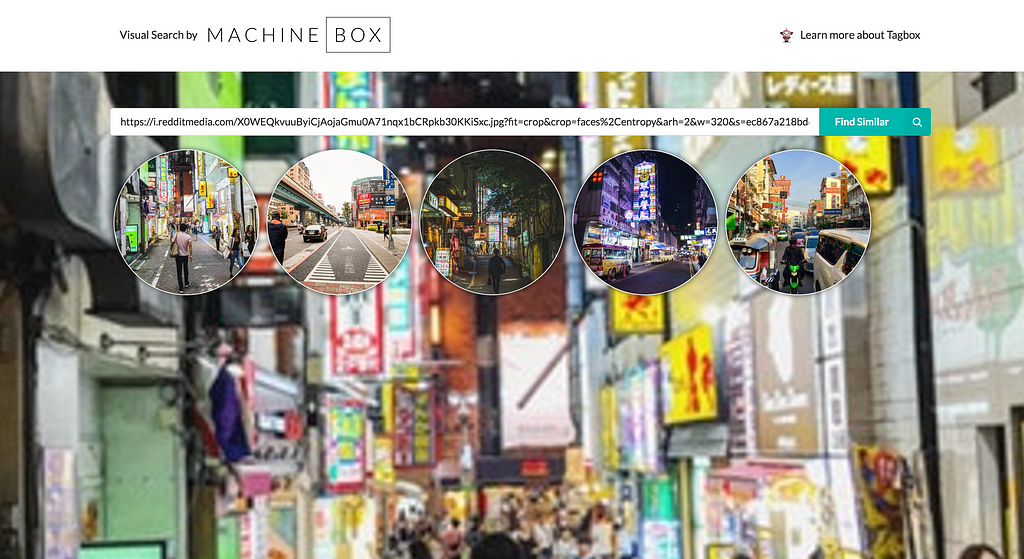

To explore the possibilities, we have put together a little app called Visual Search (it is open source on GitHub!):

https://medium.com/media/862b49b9d1e0e707b5f0edc32a92e1bc/href

The idea of the app is very simple: the home page shows a random set of images from an image dataset, and if you click on an image, it finds and displays related images.

Optionally, you can paste the URL of any image on the internet to find similar images from the dataset.

With that you can create a nice user interaction and find patterns in your dataset.

How it works

Get the Dataset and Teach

We collected around 6,500 image URLs from Reddit. Using the Reddit API, we fetched the thumbnails from some of the good subreddits about photography and nice pictures, to name a few: food, infrastructure, “I took a picture”, pic, …

They say “getting the right data is 80% of the job”, so with all these images listed in our CSV file, we’re almost done.

Next, we’re going to run and teach Tagbox about each image.

docker run -p 8080:8080 -e "MB_KEY=$MB_KEY" machinebox/tagbox

You can sign up for a key at https://machinebox.io/account

For each image, we’ll make the following API call:

POST http://localhost:8080/tagbox/teach

{
    "url": "https://i.redditmedia.com/J...MAGwY.jpg",
    "id": "733cr5",
    "tag": "other"
}

Notice that the tag used here is other. We are only interested in discovering visual similarity between images, and meaningful tags are not necessary for that.

Because we love Go, we use the Go SDK to read the CSV file and call teach for each row:

package main

import (
	"encoding/csv"
	"io"
	"log"
	"net/url"
	"os"

	"github.com/machinebox/sdk-go/tagbox"
)

func main() {
	tbox := tagbox.New("http://localhost:8080")
	f, err := os.Open("../reddit.csv")
	if err != nil {
		log.Fatal(err)
	}
	defer f.Close()
	r := csv.NewReader(f)
	for {
		// Columns -> 0:id, 1:url, 2:title
		record, err := r.Read()
		if err == io.EOF {
			break
		}
		if err != nil {
			log.Fatal(err)
		}
		u, err := url.Parse(record[1])
		if err != nil {
			// Skip rows whose URL does not parse.
			continue
		}
		// Every image gets the same tag; only the id matters for similarity.
		err = tbox.TeachURL(u, record[0], "other")
		if err != nil {
			log.Printf("[ERROR] teaching %v -> %v", record[0], err)
		} else {
			log.Printf("[INFO] teach %v", record[0])
		}
	}
}

Save and Load the state of Tagbox

With all that teaching, Tagbox learns to recognize new patterns and to match similar images, but if the Docker container stops, you lose all that teaching (this is by design). To prevent that, you can download the state file and upload it when Tagbox starts up. This is also how you scale Tagbox horizontally: each new instance loads the same state file.

We can download a state file, here reddit.machinebox.tagbox, from:

GET /tagbox/state

When Tagbox starts up, we can use MB_TAGBOX_STATE_URL to initialize Tagbox with all the teaching done.

The other option, fine for a demo given that we only have one instance of Tagbox, is to use the SDK to invoke:

POST /tagbox/state

and set the state manually.

In the same way, you can use this method to persist the state of other boxes, like Facebox.

This is not the only way to persist the state; we are working on a solution to synchronize the state of instances using Redis.

Get similar images

Now that the teaching is done, getting similar images is as simple as calling the similar endpoint with an image id:

GET /tagbox/similar?id={id}&limit={limit}

Or get similar images for a given URL:

POST http://localhost:8080/tagbox/similar

{"url": "https://machinebox.io/samples/images/monkey.jpg"}

That will get us related images, ordered by confidence:

{
    "success": true,
    "tagsCount": 4,
    "similar": [
        {"tag": "other", "confidence": 0.6018398787033975, "id": "72rr9o"},
        {"tag": "other", "confidence": 0.5670787371155419, "id": "72ed1s"},
        { ... }
    ]
}
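A small sketch of working with these two pieces from Go: building the query string for the GET form and decoding the response. The struct mirrors the JSON above; the helper names and the 0.6 confidence cut-off are assumptions chosen for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
	"strconv"
)

// similarResponse mirrors the response JSON of /tagbox/similar.
type similarResponse struct {
	Success   bool `json:"success"`
	TagsCount int  `json:"tagsCount"`
	Similar   []struct {
		Tag        string  `json:"tag"`
		Confidence float64 `json:"confidence"`
		ID         string  `json:"id"`
	} `json:"similar"`
}

// similarURL builds the GET /tagbox/similar URL for a taught image id.
func similarURL(base, id string, limit int) string {
	q := url.Values{}
	q.Set("id", id)
	q.Set("limit", strconv.Itoa(limit))
	return base + "/tagbox/similar?" + q.Encode()
}

// idsAbove decodes a response body and returns the ids whose confidence
// is at least threshold, preserving the order of the response.
func idsAbove(body string, threshold float64) ([]string, error) {
	var res similarResponse
	if err := json.Unmarshal([]byte(body), &res); err != nil {
		return nil, err
	}
	if !res.Success {
		return nil, fmt.Errorf("similar: response reported success=false")
	}
	var ids []string
	for _, s := range res.Similar {
		if s.Confidence >= threshold {
			ids = append(ids, s.ID)
		}
	}
	return ids, nil
}

func main() {
	fmt.Println(similarURL("http://localhost:8080", "733cr5", 10))

	body := `{"success":true,"tagsCount":4,"similar":[
		{"tag":"other","confidence":0.6018398787033975,"id":"72rr9o"},
		{"tag":"other","confidence":0.5670787371155419,"id":"72ed1s"}]}`
	ids, err := idsAbove(body, 0.6) // 0.6 is an arbitrary cut-off
	if err != nil {
		panic(err)
	}
	fmt.Println(ids)
}
```

Filtering on confidence is optional; the app described here simply shows the results in the order they come back.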

In our little Go app, we can write an HTTP handler that does all the work for us:

func (s *Server) handleSimilarImages(w http.ResponseWriter, r *http.Request) {
	// The image to compare against arrives as the "url" query parameter.
	urlStr := r.URL.Query().Get("url")
	u, err := url.Parse(urlStr)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	tags, err := s.tagbox.SimilarURL(u)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	var res struct {
		Items []Item `json:"items"`
	}
	// Look up the stored item for each match and attach its confidence.
	for _, tag := range tags {
		item := s.items[tag.ID]
		item.Confidence = tag.Confidence
		res.Items = append(res.Items, item)
	}
	w.Header().Set("Content-Type", "application/json; charset=utf-8")
	if err := json.NewEncoder(w).Encode(res); err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
}

Wrap up

With just two simple concepts, teaching Tagbox about images and getting similar images, we can build a rich and very engaging user experience.

You can start using Tagbox right now, in your own infrastructure, with your own rules:

- Create an account, and you’ll be able to implement these kinds of features in minutes.

- Explore Visual Search on GitHub to see all the code.